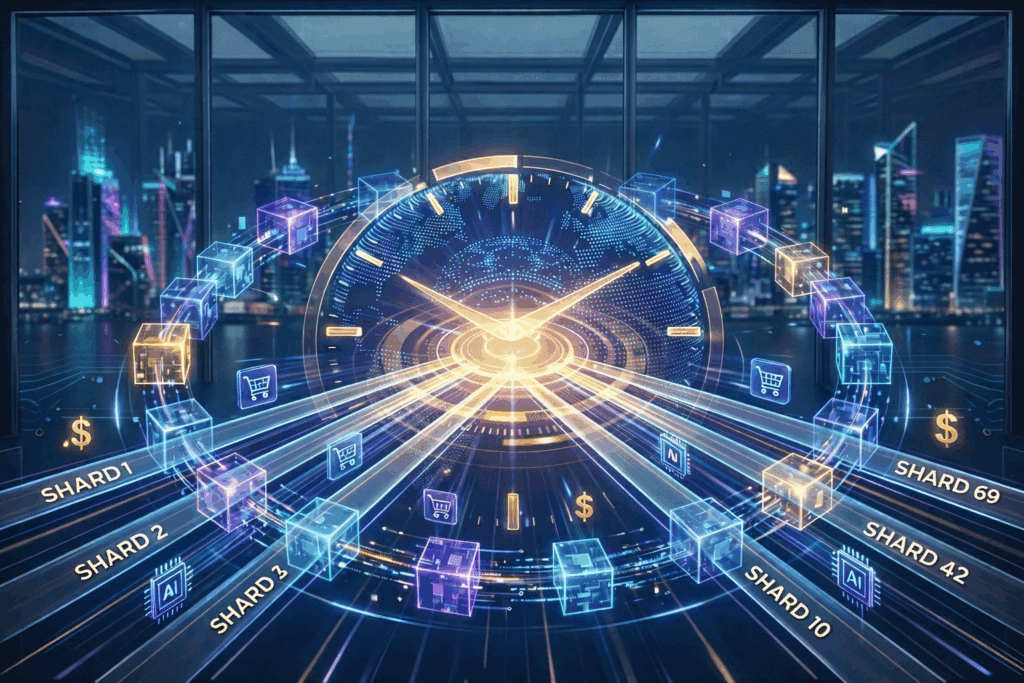

Today we are beginning the rollout of the first phase of the ASIMOV platform, a state-of-the-art polyglot development platform for trustworthy, neurosymbolic AI (“neurosymbolic” here meaning the combination of neural networks with symbolic AI). In this first public release, the platform can turn any URL into a semantic knowledge graph for use with large language models, while preserving, and providing cryptographic attestations regarding, the provenance of the information.

The platform is fully modular, enabling anybody to develop ASIMOV modules that unlock and utilize any particular data source, whether private or public, local or remote. The platform is implemented in the safe Rust programming language, and we will be making available extensive command-line tooling as well as initial software development kits for Python, Ruby, Rust, and TypeScript, languages that together cover about 80% of the software engineering market. Many aspects of the platform, including its software architecture, are novel and without precedent in open-source form. Read on for a brief overview!

The Name for Trustworthy AI

It goes without saying that a project named ASIMOV could not discuss trustworthy machine intelligence without mentioning, and paying homage to, Isaac Asimov’s pioneering Three Laws of Robotics:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law

Isaac Asimov not only coined the word “robotics” and shaped robots as we know them, but his substantial body of work also created a path dependence that implicitly frames all modern thinking on robots, especially humanoid robots. He also did some of the earliest systematic thinking on the safety and steerability of robots and artificial intelligence, and his work thus laid conceptual groundwork for what later developed into AI safety and alignment research.

In honor of Isaac Asimov’s legacy, we mean to establish ASIMOV as a global brand synonymous with trustworthy machine intelligence. There truly could not be any better name for trustworthy AI.

What is Trustworthy AI?

What would it mean for an AI system to be trustworthy? For all their other utility, certainly none of the incumbents today could be said to have reached such a high-water mark: current AI systems are fundamentally afflicted by confabulations (aka hallucinations) and frequently go off the rails even in casual daily use. Current AI systems are quite unable to provide genuine rationale for the answers they give; any ostensible rationale will itself be but a confabulation. Nor can they attest, much less prove, the provenance of any clump of unstructured data in the static knowledge soup encoded into the bulk of the model’s weights.

Indeed, current AI systems are black boxes in the worst sense, neither introspectable nor auditable. We may derive some benefit from them, but as users of the current generation of systems we have all long since learned that factual answers provided by a model must be double-checked, which introduces significant friction and risk into scenarios where such models could otherwise be deployed to automate a process. Moreover, current AI systems lack any means to fact-check a model’s answers automatically and reliably, beyond haphazardly crawling and scraping random web pages.

To make matters worse, most users of AI today are also beholden to cloud-based AI vendors whose services are incompatible with privacy and which, unsurprisingly, have already been known to leak private and confidential conversations to third parties.

A Platform for Trustworthy AI

The aforementioned challenges point the way to a few cornerstones of what trustworthy AI could mean: for starters, trustworthy machine intelligence ought certainly to be based on an explicit, symbolic knowledge base instead of the current implicit, unstructured knowledge soup. All we really require from a large language model itself is human-like language understanding and reasoning, combined with just enough tacit knowledge to solve the commonsense problem that plagued AI systems of previous eras. (That is, proper language understanding necessarily requires some amount of commonsense knowledge.) Anything much beyond these features is dead weight that is both immediately outdated and tremendously expensive to update.

It follows that trustworthy AI systems ought to have some introspective ability to access and manipulate their knowledge base. They ought to be able to explain their answers and reasoning with reference to this knowledge base, grounding any claims in cryptographically verified provenance metadata stored alongside every assertion in said knowledge base. And this knowledge base ought to be bitemporally versioned, tracking both valid time (when a fact holds in the world) and transaction time (when the fact was recorded), so as to guarantee retrospective auditability. All of these challenges and concerns are matters we are tackling within the scope of ASIMOV.
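To make “bitemporal” concrete, here is a minimal Rust sketch, assuming a hypothetical in-memory `Assertion` log that uses plain day numbers for time. The type, its field names, and the `example.org` sources are illustrative only, not the ASIMOV API. Corrections never overwrite history: an outdated row is merely marked as superseded, so the store can answer both what it believes now and what it believed at any earlier moment.

```rust
/// One assertion in a hypothetical bitemporal store (illustrative, not the ASIMOV API).
/// Times are plain day numbers to keep the sketch small.
struct Assertion {
    subject: &'static str,
    predicate: &'static str,
    object: &'static str,
    valid_from: u32,            // valid time: the fact holds in the world from this day...
    valid_to: Option<u32>,      // ...until this day (exclusive); None = still holds
    recorded_at: u32,           // transaction time: when the store recorded the assertion
    superseded_at: Option<u32>, // transaction time of its replacement; None = current belief
    source: &'static str,       // provenance placeholder; a real system would also carry a signature
}

/// Bitemporal "as of" query: what did the store believe at transaction time `tx`
/// about the state of the world at valid time `vt`?
fn as_of<'a>(log: &'a [Assertion], subject: &str, predicate: &str, vt: u32, tx: u32) -> Vec<&'a Assertion> {
    log.iter()
        .filter(|a| a.subject == subject && a.predicate == predicate)
        // Transaction-time filter: recorded by `tx` and not yet superseded at `tx`.
        .filter(|a| a.recorded_at <= tx && a.superseded_at.map_or(true, |s| s > tx))
        // Valid-time filter: `vt` falls in the half-open interval [valid_from, valid_to).
        .filter(|a| a.valid_from <= vt && a.valid_to.map_or(true, |end| vt < end))
        .collect()
}

/// Hypothetical history: on day 120 the store learns Alice works at Acme (since day 100).
/// On day 200 it learns she moved to Globex on day 150, so the first row is
/// superseded (never deleted) and two corrected rows are appended.
fn sample_log() -> Vec<Assertion> {
    vec![
        Assertion { subject: "ex:alice", predicate: "ex:worksFor", object: "ex:Acme",
            valid_from: 100, valid_to: None, recorded_at: 120, superseded_at: Some(200),
            source: "https://example.org/hr/onboarding" },
        Assertion { subject: "ex:alice", predicate: "ex:worksFor", object: "ex:Acme",
            valid_from: 100, valid_to: Some(150), recorded_at: 200, superseded_at: None,
            source: "https://example.org/hr/offboarding" },
        Assertion { subject: "ex:alice", predicate: "ex:worksFor", object: "ex:Globex",
            valid_from: 150, valid_to: None, recorded_at: 200, superseded_at: None,
            source: "https://example.org/hr/offboarding" },
    ]
}

fn main() {
    let log = sample_log();
    // (valid time, transaction time) pairs to ask about
    for (vt, tx) in [(160, 180), (160, 250), (120, 250)] {
        for a in as_of(&log, "ex:alice", "ex:worksFor", vt, tx) {
            println!("world on day {vt}, as known on day {tx}: {} (source: {})", a.object, a.source);
        }
    }
}
```

Asked on day 250 about day 160, the sketch answers Globex, yet it can still reproduce the answer it would have given on day 180, Acme, together with the source it relied on at the time. That replayability is what retrospective auditability amounts to; a real system would additionally sign each source record.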

An Algebra for Knowledge

What concrete form might such a knowledge base take? The currently prevalent approaches mirror the plain-text input/output characteristics of today’s large language models, as well as the practices of the context-engineering community around them: projects most typically represent knowledge simply as natural language in Markdown documents.

But where else in computer science do we deal with plain text in and plain text out? Even parsers map input bytes to tokens (lexical analysis) and then to concepts (syntactic and semantic analysis); they don’t stop at mere tokens. In much the same vein, we believe that it is still early days for LLMs, and that current practice will evolve to marry a token-based frontend with a concept-based backend. (It is preferable to reason in concepts rather than tokens.)

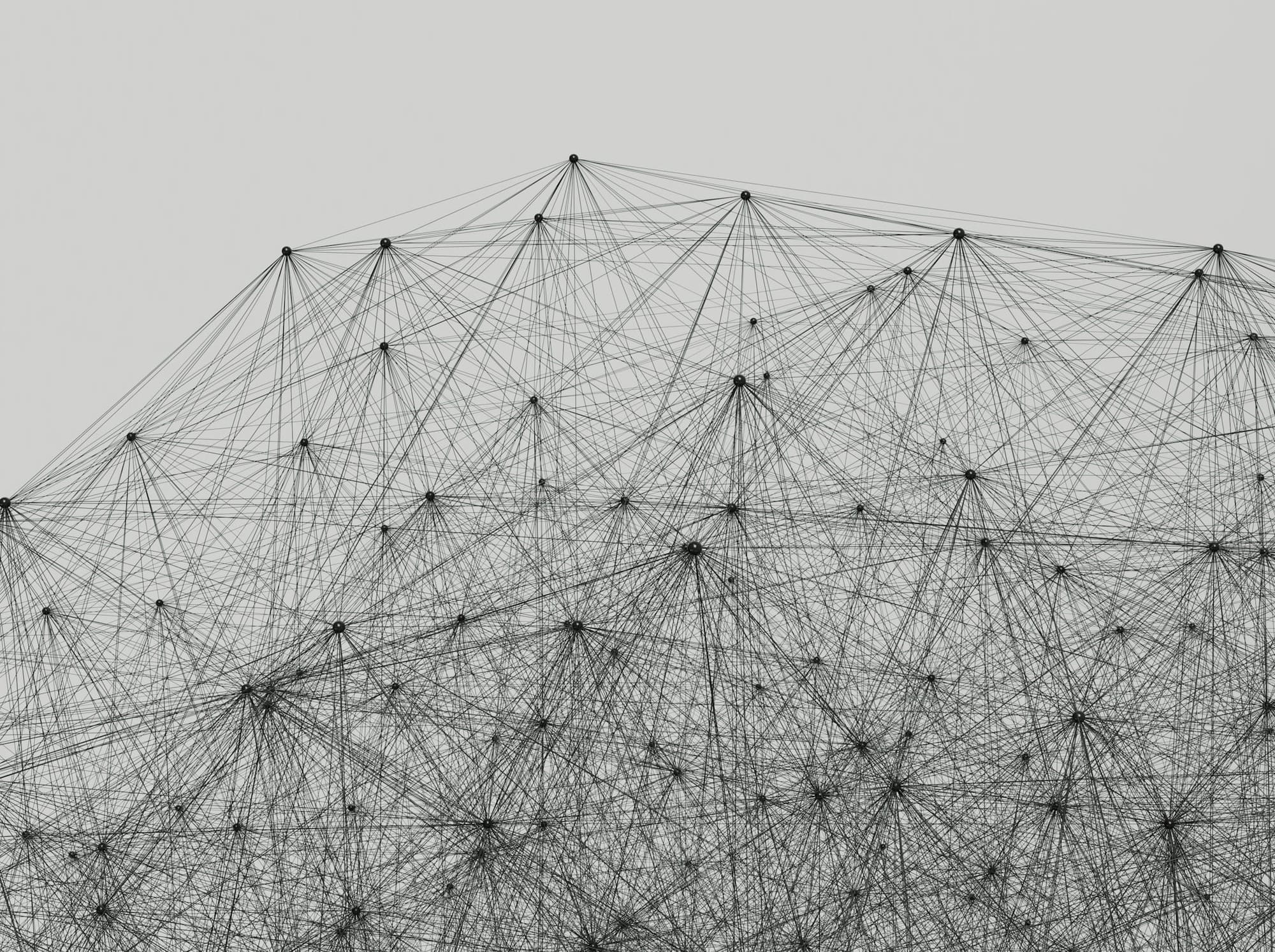

Unfamiliar though it may as yet be to many practitioners today, knowledge representation & reasoning (KR&R) is a long-established discipline and there indeed exists an excellent way to represent AIs’ knowledge bases using concepts instead of tokens. This approach is centered around knowledge graphs.

Knowledge graphs are a universal data model (meaning they subsume, and are able to represent, any other data model), with an extensive research literature going back decades and with well-defined international standards that codify and guarantee their interoperability. Thanks to JSON-LD, a JSON-based serialization for such graphs, it is easy to retrofit any existing system to import or export semantically rich structured data representing a knowledge graph.
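To illustrate how a graph subsumes another data model, the following Rust sketch maps one row of a hypothetical relational `employees` table to triples: the primary key becomes a subject IRI, each column a predicate, and the foreign key an edge to another node. The `https://example.org/` identifiers are placeholders, while `name` and `worksFor` are real schema.org properties. For brevity the sketch serializes to N-Triples, a line-based W3C RDF syntax, rather than JSON-LD; both can encode the same graph.

```rust
// schema.org supplies real, widely used property IRIs;
// example.org is a placeholder for your own identifiers.
const SCHEMA: &str = "https://schema.org/";
const BASE: &str = "https://example.org/";

/// One row of a hypothetical relational `employees` table.
struct EmployeeRow {
    id: u32,
    name: &'static str,
    employer_id: u32, // foreign key into a hypothetical `companies` table
}

/// The object of a triple is either another node (IRI) or a literal value.
enum Object {
    Iri(String),
    Literal(String),
}

struct Triple {
    subject: String,
    predicate: String,
    object: Object,
}

/// The primary key becomes the subject IRI; each column becomes a predicate.
fn row_to_triples(row: &EmployeeRow) -> Vec<Triple> {
    let subject = format!("{}employee/{}", BASE, row.id);
    vec![
        Triple {
            subject: subject.clone(),
            predicate: format!("{}name", SCHEMA),
            object: Object::Literal(row.name.to_string()),
        },
        // The foreign key turns into an edge to another node,
        // so a SQL join becomes a graph traversal.
        Triple {
            subject,
            predicate: format!("{}worksFor", SCHEMA),
            object: Object::Iri(format!("{}company/{}", BASE, row.employer_id)),
        },
    ]
}

/// Serialize one triple as an N-Triples line (minimal literal escaping only).
fn to_ntriples(t: &Triple) -> String {
    let object = match &t.object {
        Object::Iri(iri) => format!("<{}>", iri),
        Object::Literal(s) => format!(
            "\"{}\"",
            s.replace('\\', "\\\\").replace('"', "\\\"").replace('\n', "\\n")
        ),
    };
    format!("<{}> <{}> {} .", t.subject, t.predicate, object)
}

fn main() {
    let row = EmployeeRow { id: 1, name: "Alice", employer_id: 7 };
    for triple in row_to_triples(&row) {
        println!("{}", to_ntriples(&triple));
    }
}
```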

In short, one of the remarkable aspects of knowledge graphs is that a graph plus a graph is still a graph. This is a tremendously desirable quality for breaking open the data silos that characterize our splintered, captive digital identities and digital lives today. One of the use cases for ASIMOV that we’re really passionate about supporting is building a personal intelligence layer that brings together all the information in your life, enabling actionable insights that would otherwise be impossible: in a sense, a secure personal data warehouse accessible to your personal AI.
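The “graph plus a graph” property is easy to demonstrate once a graph is modeled as a set of triples, as in this minimal Rust sketch. The calendar and contacts data and the `ex:` names are hypothetical, and plain strings stand in for IRIs. Merging is ordinary set union; because both sources refer to the same identifiers, the merged graph answers a question that neither source can answer alone.

```rust
use std::collections::BTreeSet;

// A triple is (subject, predicate, object); plain strings keep the sketch short.
type Triple = (String, String, String);
// A graph is simply a set of triples.
type Graph = BTreeSet<Triple>;

fn graph(triples: &[(&str, &str, &str)]) -> Graph {
    triples
        .iter()
        .map(|(s, p, o)| (s.to_string(), p.to_string(), o.to_string()))
        .collect()
}

/// Merging two graphs is set union: the result is again a graph, duplicate
/// statements collapse, and nodes sharing an identifier link up across sources.
fn merge(a: &Graph, b: &Graph) -> Graph {
    a.union(b).cloned().collect()
}

/// All objects `o` such that (s, p, o) is in the graph.
fn objects<'a>(g: &'a Graph, s: &str, p: &str) -> Vec<&'a str> {
    g.iter()
        .filter(|(ts, tp, _)| ts == s && tp == p)
        .map(|(_, _, o)| o.as_str())
        .collect()
}

/// Hypothetical data from a calendar export.
fn calendar() -> Graph {
    graph(&[
        ("ex:me", "ex:attends", "ex:event42"),
        ("ex:event42", "ex:location", "ex:Berlin"),
        ("ex:bob", "ex:name", "Bob"),
    ])
}

/// Hypothetical data from an address book export.
fn contacts() -> Graph {
    graph(&[
        ("ex:me", "ex:knows", "ex:bob"),
        ("ex:bob", "ex:livesIn", "ex:Berlin"),
        ("ex:bob", "ex:name", "Bob"),
    ])
}

/// Which people I know live in the city of an event I attend?
fn contacts_near_my_events(g: &Graph) -> Vec<String> {
    let mut out = Vec::new();
    for event in objects(g, "ex:me", "ex:attends") {
        for city in objects(g, event, "ex:location") {
            for person in objects(g, "ex:me", "ex:knows") {
                if objects(g, person, "ex:livesIn").contains(&city) {
                    out.push(format!("{person} lives in {city}, where {event} takes place"));
                }
            }
        }
    }
    out
}

fn main() {
    let merged = merge(&calendar(), &contacts());
    println!("{} triples after merging", merged.len());
    for insight in contacts_near_my_events(&merged) {
        println!("{insight}");
    }
}
```

The shared statement about Bob’s name appears once in the merged graph, and the cross-source query only succeeds after the merge.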

A High-Assurance Language

Another aspect of the trustworthiness of AI systems concerns the programming languages those systems are implemented in. We have chosen to build using the high-assurance, memory-safe systems programming language Rust. This deliberately bucks a trend that has seen most AI developer frameworks to date implemented in Python and/or TypeScript, and lower-level layers (including inference engines) implemented in C++.

Building with Rust instead of C++ means that ASIMOV is immune to whole classes of severe software defects and security vulnerabilities, such as buffer overflows and use-after-free errors, that plague software systems implemented in memory-unsafe languages (see CVE-2024-42479 for just one representative example).
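As a minimal illustration of what memory safety buys, consider a write into a buffer at an untrusted offset, the kind of operation where a missing bounds check in C or C++ can silently corrupt adjacent memory. The hypothetical `write_at` helper below uses checked arithmetic and `get_mut` so that a bad offset becomes an ordinary error; even plain indexing such as `buf[offset..end]` would panic rather than write out of bounds.

```rust
/// Copy `data` into `buf` at `offset`, rejecting any range that does not fit.
/// Hypothetical helper for illustration only.
fn write_at(buf: &mut [u8], offset: usize, data: &[u8]) -> Result<(), String> {
    let len = buf.len();
    // checked_add refuses an offset so large that offset + data.len() would wrap around.
    let end = offset
        .checked_add(data.len())
        .ok_or("offset + length overflows usize")?;
    // get_mut returns None instead of a reference into memory past the buffer.
    let dst = buf
        .get_mut(offset..end)
        .ok_or_else(|| format!("range {offset}..{end} is out of bounds for a {len}-byte buffer"))?;
    dst.copy_from_slice(data);
    Ok(())
}

fn main() {
    let mut buf = [0u8; 8];
    println!("{:?}", write_at(&mut buf, 4, &[1, 2, 3, 4])); // fits exactly
    println!("{:?}", write_at(&mut buf, 6, &[9, 9, 9, 9])); // would overrun by 2 bytes
    println!("{:?}", write_at(&mut buf, usize::MAX, &[9])); // offset arithmetic would wrap
    println!("{:?}", buf); // only the first, valid write took effect
}
```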

Beyond Rust’s top-notch safety characteristics, the language has several other notable things going for it. For one, it has been named the most admired programming language in Stack Overflow’s annual developer survey for nine consecutive years, an unprecedented feat which also shows that Rust developers are rather passionate about programming in Rust specifically. For another, the Rust compiler produces high-performance static executables; more on the implications of that below.

A Platform for Polyglots, by Polyglots

Our aim is for ASIMOV to become standard tooling available on every operating system and ultimately accessible from every programming language. This means we are going full polyglot, supporting a wide variety of programming languages instead of just one or two (these days, typically Python and/or TypeScript). Instead of forcing adherence to the monoculture du jour, we will enable everyone to use their programming language of choice in building their own AI systems.

This ambition is facilitated by Rust’s excellent cross-compilation toolchain, which empowers us to produce turnkey static binaries that simply work everywhere (and will keep on working for decades to come), free of the dependency hell that characterizes building large C++ systems in particular.

Free and Unencumbered Software

ASIMOV is the first AI platform that is 100% free and unencumbered software, placed into the public domain with no strings attached. This means you may copy, reuse, and remix our code without any legal requirement even for attribution. We believe this will tremendously aid the distribution and mindshare of the platform, soon making ASIMOV the standard reference implementation for any future project building along similar lines, in industry and academia alike, not to mention for code generation by AI.

Follow Us on the Journey!

If all the preceding sounds intriguing, follow our platform updates at @ASIMOV_Platform on X and @asimov-platform on GitHub, and subscribe to this blog to be notified about upcoming in-depth technical material in the coming weeks!

Updated: October 20, 2025
