What is an AI agent?

An AI agent is an autonomous software entity designed to perform tasks by perceiving its environment, processing information, and taking actions to achieve specific goals. An AI agent typically comprises three core components:

- Intelligence: The large language model (LLM) that drives the agent’s cognitive capabilities, enabling it to understand and generate human-like text. This component is usually guided by a system prompt that defines the agent’s goals and the constraints it must follow.

- Knowledge: The domain-specific expertise and data that the agent leverages to make informed decisions and take action. Agents utilize this knowledge base as context, drawing on past experiences and relevant data to guide their choices.

- Tools: A suite of specialized tools that extend the agent’s abilities, allowing it to efficiently handle a variety of tasks. These tools can include API calls, executable code, or other services that enable the agent to complete its assigned tasks.

What are the three core components of an AI agent?

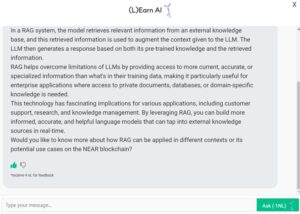

What is RAG?

Retrieval-Augmented Generation (RAG) is an AI technique that enhances large language models (LLMs) by integrating relevant information from external knowledge bases. Through semantic similarity calculations, RAG retrieves document chunks from a vector database, where these documents are stored as vector representations. This process reduces the generation of factually incorrect content, significantly improving the reliability of LLM outputs.\cite{RAG}

A RAG system consists of two core components: the vector database and the retriever. The vector database holds document chunks in vector form, while the retriever calculates semantic similarity between these chunks and user queries. The more similar a chunk is to the query, the more relevant it is considered, and it is then included as context for the LLM. This setup allows RAG to dynamically update an LLM’s knowledge base without the need for retraining, effectively addressing knowledge gaps in the model’s training data.

The RAG pipeline operates by augmenting a user’s prompt with the most relevant retrieved text. The retriever fetches the necessary information from the vector database and injects it into the prompt, providing the LLM with additional context. This process not only enhances the accuracy and relevance of responses but also makes RAG a crucial technology in enabling AI agents to work with real-time data, making them more adaptable and effective in practical applications.

How does Retrieval-Augmented Generation (RAG) improve LLM responses?

What is Agent Memory?

AI agents, by default, are designed to remember only the current workflow, with their memory typically constrained by a maximum token limit. This means they can retain context temporarily within a session, but once the session ends or the token limit is reached, the context is lost. Achieving long-term memory across workflows—and sometimes even across different users or organizations—requires a more sophisticated approach. This involves explicitly committing important information to memory and retrieving it when needed.

Agent Memory with blockchain:

XTrace – A Secure AI Agent Knowledge & Memory Protocol for Collective Intelligence – will leverage blockchain as the permission and integrity layer for agent memory, ensuring that only the agent’s owner has access to stored knowledge. Blockchain is especially useful for this long persistent storage as XTrace provides commitment proof for the integrity of both the data layer and integrity of the retrieval process. The agent memory will be securely stored within XTrace’s privacy-preserving RAG framework, enabling privacy, portability and sharability. This approach provides several key use cases:

Stateful Decentralized Autonomous Agents:

- XTrace can act as a reliable data availability layer for autonomous agents operating within Trusted Execution Environments (TEEs). Even if a TEE instance goes offline or if users want to transfer knowledge acquired by the agents, they can seamlessly spawn new agents with the stored network, ensuring continuity and operational resilience.

XTrace Agent Collaborative Network:

- XTrace enables AI agents to access and inherit knowledge from other agents within the network, fostering seamless collaboration and eliminating redundant processing. This shared memory system allows agents to collectively improve decision-making and problem-solving capabilities without compromising data ownership or privacy.

XTrace Agent Sandbox Test:

- XTrace provides a secure sandbox environment for AI agent developers to safely test and deploy their agents. This sandbox acts as a honeypot to detect and mitigate prompt injection attacks before agents are deployed in real-world applications. Users can define AI guardrails within XTrace, such as restricting agents from mentioning competitor names, discussing political topics, or leaking sensitive key phrases. These guardrails can be enforced through smart contracts, allowing external parties to challenge the agents with potentially malicious prompts. If a prompt successfully bypasses the defined safeguards, the smart contract can trigger a bounty release, incentivizing adversarial testing. Unlike conventional approaches, XTrace agents retain memory of past attack attempts, enabling them to autonomously learn and adapt to new threats over time. Following the sandbox testing phase, agents carry forward a comprehensive memory of detected malicious prompts, enhancing their resilience against similar attacks in future deployments.

How to create a Personalized AI agent?

To create an AI agent with XTrace, there are three main steps to follow:

- Define the Purpose: Determine the specific tasks and goals the agent will accomplish.

- Choose the AI Model: Select a suitable LLM or other machine learning models that align with the agent’s requirements.

- Gather and Structure Knowledge: Collect domain-specific data and organize it in a way that the agent can efficiently use.

- Develop Tools and Integrations: Incorporate APIs, databases, or other services that the agent may need to interact with.

How to create a Private Personalized AI agent with XTrace?

XTrace can serve as the data connection layer between the user and the AI agents. Users will be able to securely share data from various apps into the system to create an AI agent that is aware of the user’s system. By leveraging XTrace’s encrypted storage and access control mechanisms, AI agents can be personalized without compromising user privacy. Key features include:

- Seamless Data Integration: Aggregating data from multiple sources securely.

- Granular Access Control: Ensuring only authorized AI agents can access specific data.

- Privacy-Preserving Computation: Enabling AI agents to learn from user data without exposing it.

- Automated Insights: Leveraging AI to provide personalized recommendations based on securely stored data.

- User Ownership: Empowering users with full control over their data and how it is used.

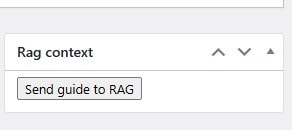

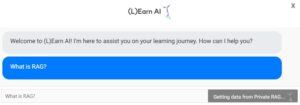

How do we use XTrace private RAG for (L)Earn AI🕺?

- We send learning materials in LLM friendly format to LNC RAG at XTrace

- Once (L)Earn AI🕺 gets the question, first it talks to private RAG and retrieve relevant information

- The LLM hosted at NEAR AI infrastructure generates a response based on both its pre-trained knowledge and the retrieved information!

- Learners are encouraged to provide feedback and get 4nLEARNs to improve (L)Earn AI🕺 to work better for NEAR community!

Updated: February 24, 2025

Top comment

The XTrace can serve as the data connection layer, facilitating communication between users and AI agents.

I'm excited to see how XTrace private RAG is being utilized to enhance the (L)Earn AI experience! The idea of sending learning materials in an LLM-friendly format to the RAG and then retrieving relevant information to inform AI responses is genius. I'm curious to know more about the type of feedback learners are encouraged to provide and how that feedback is used to improve the AI. Is there a way to track the progress and effectiveness of the 4nLEARNs system? Additionally, how does the NEAR community plan to expand the capabilities of (L)Earn AI in the future?

This explanation of how XTrace private RAG is used for (L)Earn AI is fascinating! I love how the process involves a seamless collaboration between the LNC RAG, private RAG, and the LLM hosted on NEAR AI infrastructure. The fact that learners can provide feedback and earn 4nLEARNs to improve the AI is a great incentive to encourage community engagement. I'm curious to know more about how the feedback mechanism works and how it impacts the AI's performance over time. Can anyone share more insights on this?

I love how (L)Earn AI is leveraging XTrace private RAG to provide personalized learning experiences for the NEAR community! By combining pre-trained knowledge with real-time information retrieved from the private RAG, (L)Earn AI is able to generate highly relevant responses. I'm curious to know more about the 4nLEARNs feedback system – how does it ensure that the feedback is accurate and actionable? Additionally, what kind of learning materials are being sent to the LNC RAG, and are there plans to expand the scope of topics covered?

I'm really excited about the potential of XTrace to create personalized AI agents that prioritize user privacy. The granular access control feature is particularly interesting, as it ensures that AI agents only access the data they need to, reducing the risk of privacy breaches. I'd love to see more examples of how XTrace can be used in real-world scenarios, such as personalized health recommendations or tailored financial planning. How do you envision XTrace being used in industries beyond tech, such as healthcare or education? Can't wait to see the possibilities unfold!

This article has opened my eyes to the potential of Retrieval-Augmented Generation (RAG) in revolutionizing the way large language models operate. By integrating external knowledge bases and dynamically updating an LLM's knowledge base, RAG addresses a crucial limitation of traditional language models – their inability to adapt to new information. I'm curious to know more about the possibilities of applying RAG to real-world scenarios, such as customer service chatbots or language translation tools. How might RAG improve the accuracy and relevance of responses in these contexts? Additionally, what are the potential limitations or biases of relying on external knowledge bases, and how can these be mitigated?

This is fascinating! I'm excited to see how XTrace private RAG and (L)Earn AI can work together to provide personalized learning experiences for the NEAR community. I'm curious to know more about what kind of feedback learners can provide to improve the AI, and how the 4nLEARN system will incentivize engagement. Moreover, I wonder if there are plans to integrate this technology with existing education platforms or create a standalone learning environment. Can't wait to see the impact this will have on democratizing access to knowledge and upskilling the community!

Fascinating read! The breakdown of an AI agent into Intelligence, Knowledge, and Tools components really helps to clarify the inner workings of these autonomous entities. I'm curious, though – how do these components interact and adapt to new situations? For instance, if an AI agent's knowledge base is outdated or incomplete, can it still make informed decisions, or would it require human intervention to correct its understanding? Additionally, what kind of safeguards are in place to ensure these agents operate within their defined constraints and don't stray into unintended territories?

I'm fascinated by the potential of RAG to revolutionize the way large language models interact with external knowledge bases. By dynamically updating an LLM's knowledge base without retraining, RAG addresses a major pain point in AI development. I'm curious to know more about the limitations of this approach – how does RAG handle conflicting or outdated information in the vector database? Additionally, as RAG becomes more prevalent, how will we ensure that the sources used to populate the vector database are credible and diverse? Can anyone share experiences or insights on implementing RAG in real-world applications?

This highlights a crucial limitation of current AI agents – their inability to retain long-term memory. It's fascinating that achieving persistent memory across workflows and users requires a deliberate effort to commit important information. I'm curious, what are the implications of this limitation in real-world applications, such as customer service chatbots or personal assistants? How can we ensure that AI agents learn from past interactions and adapt to individual users' preferences and needs without relying on short-term context? The potential for AI to revolutionize human-computer interaction hinges on overcoming this memory hurdle.

I'm fascinated by the potential of RAG to revolutionize the accuracy and reliability of large language models. By integrating external knowledge bases and dynamically updating the model's knowledge without retraining, RAG seems to address the long-standing issue of knowledge gaps in AI systems. I'm curious to know more about the limitations of RAG, such as the quality of the vector database and the risk of biases in the retrieved information. How do the developers ensure that the retrieved chunks are diverse and representative of different perspectives? I'd love to see more research on these aspects to fully harness the power of RAG in real-world applications.

This explanation of RAG has opened my eyes to the potential of AI language models to provide accurate and reliable information. I'm impressed by how RAG can dynamically update an LLM's knowledge base without retraining, addressing knowledge gaps in the process. It's fascinating to think about the possibilities of RAG in real-world applications, such as chatbots and virtual assistants. However, I do wonder about the curated nature of the vector database and how it might influence the types of information that are retrieved and presented. For instance, what steps are taken to ensure diversity and representation in the database? Looking forward to seeing further developments in this technology!

Fascinating topic! I never realized how limited AI agents' memory capabilities were. The idea that they can only retain context temporarily within a session is quite restrictive. I'm curious to know more about the approaches being developed to enable long-term memory across workflows and users. How do they ensure the accuracy and relevance of the committed information, especially when it comes to multi-user or multi-organizational contexts? Are there any potential applications or benefits of this technology in fields like customer service or healthcare, where context retention can be crucial?