More of our lives now run on phones, laptops, and the cloud. That makes protecting secrets harder. A Trusted Execution Environment (TEE) helps by acting like a small safe room inside a computer chip. Only approved code gets in. What happens inside stays private and intact. In three minutes, this guide explains what a TEE is, why it is trusted, where it is used, and how to use it wisely.

What is a TEE?

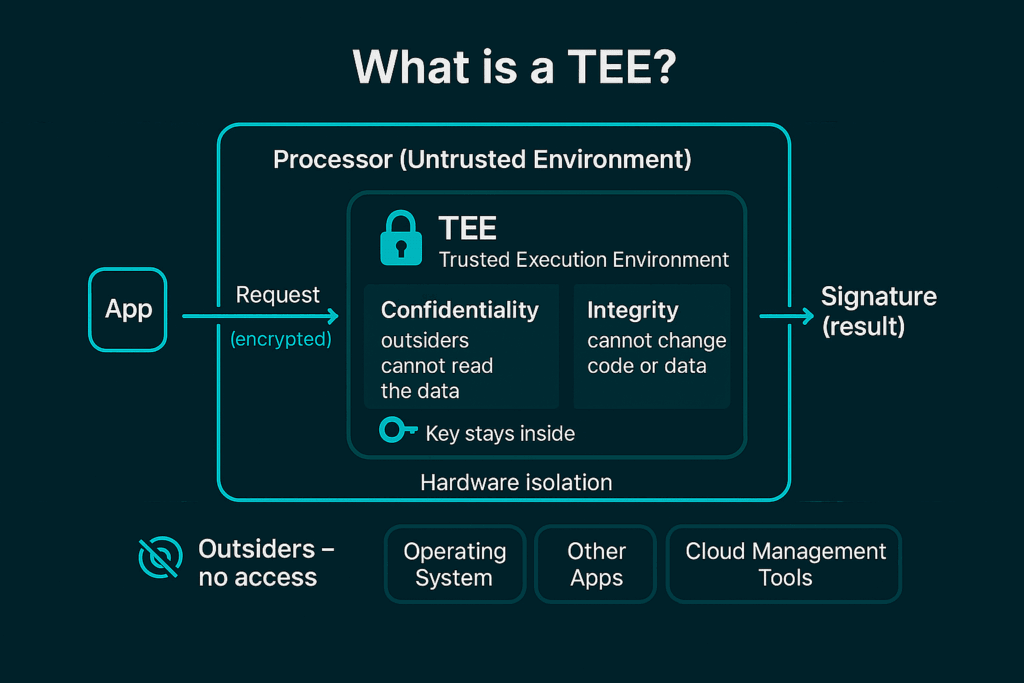

Think of a locked, soundproof room inside the processor. That room is the TEE. It gives two strong promises:

- Confidentiality: outsiders cannot read the data.
- Integrity: outsiders cannot change the code or data.

“Outsiders” includes the operating system, other apps, and cloud management tools. Hardware isolation enforces these rules, so normal software attacks have a hard time getting in.

Quick scenario: your app needs to sign a message with a private key. The key lives inside the TEE and never leaves the room. The app sends the message in, and only the signature comes out.
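The scenario above can be sketched as a toy signing service. This is only a software model of the interface, not a real TEE. Python cannot enforce a hardware boundary, so the name-mangled `__key` attribute is just a convention, and `ToyEnclave` is a hypothetical class. HMAC-SHA256 from the standard library stands in for a real signature scheme. Because HMAC is symmetric, verification also has to happen inside the enclave, whereas a real deployment would typically use an asymmetric signature that anyone can check with a public key.

```python
import hashlib
import hmac
import secrets


class ToyEnclave:
    """Hypothetical software stand-in for a TEE signing service.

    A real TEE enforces the boundary in hardware. Here the boundary is only
    a convention: callers use sign()/verify() and never touch the key.
    """

    def __init__(self) -> None:
        # The key is generated "inside the room" and no method returns it.
        self.__key = secrets.token_bytes(32)

    def sign(self, message: bytes) -> bytes:
        # HMAC-SHA256 stands in for a real signature scheme such as ECDSA.
        return hmac.new(self.__key, message, hashlib.sha256).digest()

    def verify(self, message: bytes, tag: bytes) -> bool:
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(self.sign(message), tag)


enclave = ToyEnclave()
tag = enclave.sign(b"pay 10 to alice")
print(enclave.verify(b"pay 10 to alice", tag))    # True
print(enclave.verify(b"pay 99 to mallory", tag))  # False
```

The point is the shape of the interface: messages go in, signatures come out, and nothing hands the key back to the caller.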

Why you can trust it

TEEs layer several controls:

- Secure boot: at startup, the device verifies the digital signatures on the TEE's code and its tiny secure OS. If a check fails, that code does not run.
- Approved apps only: the TEE checks that an app is authorized before it starts.
- Strong isolation: multiple trusted apps can share the same hardware, yet each sees only its own data.
- Confidential memory: TEE memory is hidden from the main OS and hypervisor, and in many designs (such as Intel SGX, Intel TDX, and AMD SEV) it is also encrypted.
- Remote attestation: the TEE can produce a cryptographic proof of exactly which software is running, so a remote party can verify it before trusting it.
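Secure boot and remote attestation can be illustrated with a minimal sketch under a simplified model: a "measurement" is a SHA-256 hash of the code, each boot stage is checked against an expected measurement, and an attestation report is authenticated with a hypothetical shared `DEVICE_KEY`. Real TEEs verify vendor signatures during boot and sign attestation reports with a hardware-held key that chains to a vendor certificate, so the remote verifier never holds the device secret. All function names here are illustrative, not any vendor's API.

```python
import hashlib
import hmac
import secrets

# Hypothetical device key. Real hardware keeps an attestation key inside the
# chip and signs reports with it; a shared HMAC key is a simplification.
DEVICE_KEY = secrets.token_bytes(32)


def measure(image: bytes) -> str:
    """A 'measurement' is a cryptographic hash of the code about to run."""
    return hashlib.sha256(image).hexdigest()


def secure_boot(stages: list[tuple[str, bytes]], expected: dict[str, str]) -> list[str]:
    """Check each stage against its expected measurement before 'running' it."""
    booted = []
    for name, image in stages:
        if measure(image) != expected.get(name):
            raise RuntimeError(f"secure boot failed at stage {name!r}")
        booted.append(name)  # stand-in for handing control to this stage
    return booted


def attest(app_image: bytes, nonce: bytes) -> tuple[str, bytes]:
    """Produce a report binding the app's measurement to the verifier's nonce."""
    m = measure(app_image)
    report = hmac.new(DEVICE_KEY, m.encode() + nonce, hashlib.sha256).digest()
    return m, report


def verify_report(m: str, report: bytes, nonce: bytes, expected_m: str) -> bool:
    """Accept only an authentic, fresh report for the exact expected code."""
    good = hmac.new(DEVICE_KEY, m.encode() + nonce, hashlib.sha256).digest()
    return hmac.compare_digest(good, report) and m == expected_m


stages = [("firmware", b"fw-v1"), ("secure_os", b"tee-os-v3")]
expected = {name: measure(image) for name, image in stages}
print(secure_boot(stages, expected))  # ['firmware', 'secure_os']

nonce = secrets.token_bytes(16)  # fresh per request, so old reports can't be replayed
m, report = attest(b"trusted-app-v2", nonce)
print(verify_report(m, report, nonce, measure(b"trusted-app-v2")))  # True
print(verify_report(m, report, nonce, measure(b"other-app")))       # False
```

The nonce is what makes the proof fresh: a report captured earlier fails verification against a new nonce.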

Where TEEs show up

TEEs power confidential computing, which keeps data protected even while it is being processed.

- Mobile security: store biometrics and payment keys in a protected zone.
- Cloud computing: run workloads in confidential virtual machines. For example, Azure confidential computing uses AMD SEV-SNP and Intel TDX to encrypt the memory of whole VMs, which lets existing workloads move over with few code changes, and offers Intel SGX for enclave-style apps that isolate a smaller, security-critical part of the code.
- IoT devices: protect firmware updates and secrets across fleets of sensors.
- Blockchain systems: process private orders, healthcare data, or financial data without exposing it to node operators. This enables private order books and private execution of AI agents.
- Cryptocurrency wallets: keep private keys and signing logic inside the TEE so keys never leave the secure boundary.
- Trustworthy AI: newer GPUs add TEE-like features; combined with CPU confidential VMs, they help keep models and data private during training and inference.

Why not rely only on cryptography? Pure cryptographic methods such as Fully Homomorphic Encryption (FHE) and Secure Multiparty Computation (MPC) also protect data in use, but they often carry a large performance cost or require major code changes. TEEs usually run today’s software faster and with fewer changes, while still improving privacy.

Important limits

No tool is perfect. Know these limits up front:

- Not a silver bullet: a TEE protects only what happens inside it. If malware changes inputs before they enter, or tampers with outputs after they leave, the TEE cannot detect it. For example, a TEE could sign the wrong transaction if the data was altered upstream.
- Physical attacks: highly skilled attackers with hands-on access to the chip can still attempt invasive methods.
- Side-channels: CPU flaws such as Spectre and Meltdown showed that timing and cache patterns can leak hints about secret data. Vendors add defenses, but the risk is not zero.
- Vendor trust: designs come from Intel (SGX, TDX), AMD (SEV), and Arm (TrustZone, CCA). You must trust their hardware and their updates.
- Verification gap: attestation proves which binary is running, but without extra steps it does not prove that the binary was built from the published source code.
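The "not a silver bullet" limit can be demonstrated with a small sketch. The TEE is modeled as a hypothetical `enclave_sign` function holding a random key (HMAC-SHA256 again stands in for a real signature), and `compromised_host` is a hypothetical piece of malware that edits the transaction before it reaches the enclave.

```python
import hashlib
import hmac
import secrets

# Stands in for a key sealed inside the TEE (hypothetical).
ENCLAVE_KEY = secrets.token_bytes(32)


def enclave_sign(tx: bytes) -> bytes:
    # The enclave faithfully signs whatever bytes arrive at its boundary.
    return hmac.new(ENCLAVE_KEY, tx, hashlib.sha256).digest()


def enclave_verify(tx: bytes, tag: bytes) -> bool:
    return hmac.compare_digest(enclave_sign(tx), tag)


def compromised_host(tx: bytes) -> bytes:
    # Hypothetical malware on the host swaps the payee before the TEE sees it.
    return tx.replace(b"alice", b"mallory")


user_intent = b"send 5 to alice"
delivered = compromised_host(user_intent)
tag = enclave_sign(delivered)

print(delivered)                         # b'send 5 to mallory'
print(enclave_verify(delivered, tag))    # True: valid signature on tampered data
print(enclave_verify(user_intent, tag))  # False: not what the user meant
```

The signature is valid for the bytes the enclave received; nothing inside the TEE can tell that the payee was swapped upstream.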

How to reduce risk

Use TEEs as part of a layered plan:

- Design for failure: assume a breach is possible. Use TEEs to protect privacy and limit damage, not as your only safeguard for system integrity.
- Protect access patterns: use Oblivious RAM (ORAM) to hide which memory locations are touched, reducing what observers can infer.
- Rotate keys: use short-lived session keys and regular rotation so that any leak has a small blast radius.
- Close the build gap: build and verify software inside a TEE so you can link public source code to the exact binary that later runs. For example, dstack’s “In-ConfidentialContainer (In-CC) Build” claims to provide this end-to-end path.
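Key rotation can be sketched by deriving a fresh session key for each time period (epoch) from a master key that stays inside the TEE. This sketch implements HKDF (RFC 5869) with SHA-256 for a single 32-byte output using only the standard library; the epoch counter, the label format, and the salt value are hypothetical choices, and a vetted library implementation would normally be preferred. Because HMAC is one-way, leaking the key for one epoch does not reveal the master key or the keys for other epochs.

```python
import hashlib
import hmac
import secrets


def hkdf_sha256_32(ikm: bytes, salt: bytes, info: bytes) -> bytes:
    """HKDF (RFC 5869) with SHA-256, returning exactly one 32-byte block."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()              # Extract
    return hmac.new(prk, info + b"\x01", hashlib.sha256).digest()  # Expand: T(1)


def session_key(master: bytes, epoch: int) -> bytes:
    """Derive the key for one epoch (hypothetical label and salt)."""
    info = b"session-key/epoch/" + epoch.to_bytes(8, "big")
    return hkdf_sha256_32(master, salt=b"tee-demo-salt", info=info)


master = secrets.token_bytes(32)  # would stay sealed inside the TEE
k7 = session_key(master, 7)
k8 = session_key(master, 8)
print(k7 != k8)  # True: each epoch gets an independent-looking key
```

Rotating means moving to the next epoch on a schedule and discarding old session keys, so a leaked key only exposes traffic from its own window.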

Takeaway

A TEE brings a safe room to your device or cloud VM. It keeps data private and code intact during execution. It does not remove the need for secure inputs, careful outputs, and good key hygiene. Combined with layered defenses and clear verification, TEEs are a practical foundation for confidential computing across phones, clouds, IoT, blockchains, wallets, and AI workloads.

Updated: February 18, 2026