Artificial Intelligence (AI) has brought major breakthroughs, changing how we work, learn, and interact with technology. But as AI becomes more advanced, so do the concerns around trust, transparency, and control. Most people rely on closed systems run by big companies, with little insight into how decisions are made or how personal data is handled. That lack of visibility raises important questions about accuracy, accountability, and privacy.

NEAR Protocol takes a different path – one that combines AI with the transparency of blockchain. The result is AI that’s not only powerful but also open, auditable, and built around user control. Let’s take a closer look at how NEAR is making this possible.

Why Verifiable AI Matters

Many of today’s most popular AI tools – like GPT-4 or Claude – work like black boxes. You give them a prompt, and they give you an answer, but you can’t see how they reached that conclusion. You don’t know what data they used, how it was processed, or whether you can trust the result. This is where the concept of verifiable AI comes in: systems that are open about how they work and that allow anyone to check and confirm their outputs.

NEAR’s mission is to build AI that users can trust – AI that’s open by design, and where both processes and results can be independently verified.

Building Transparent AI on NEAR

A key part of this effort is NEAR AI Cloud. It runs model queries inside Trusted Execution Environments (TEEs), so your prompts, model weights, and outputs stay hidden from the infrastructure, and each run produces an attestation you can verify. Each inference is signed to prove it executed in a genuine, locked-down enclave with approved code, giving you privacy and integrity without sacrificing responsiveness. Combined with on-/off-chain verification (and optional nStamping for provenance), this brings private, verifiable, fast AI to end users.

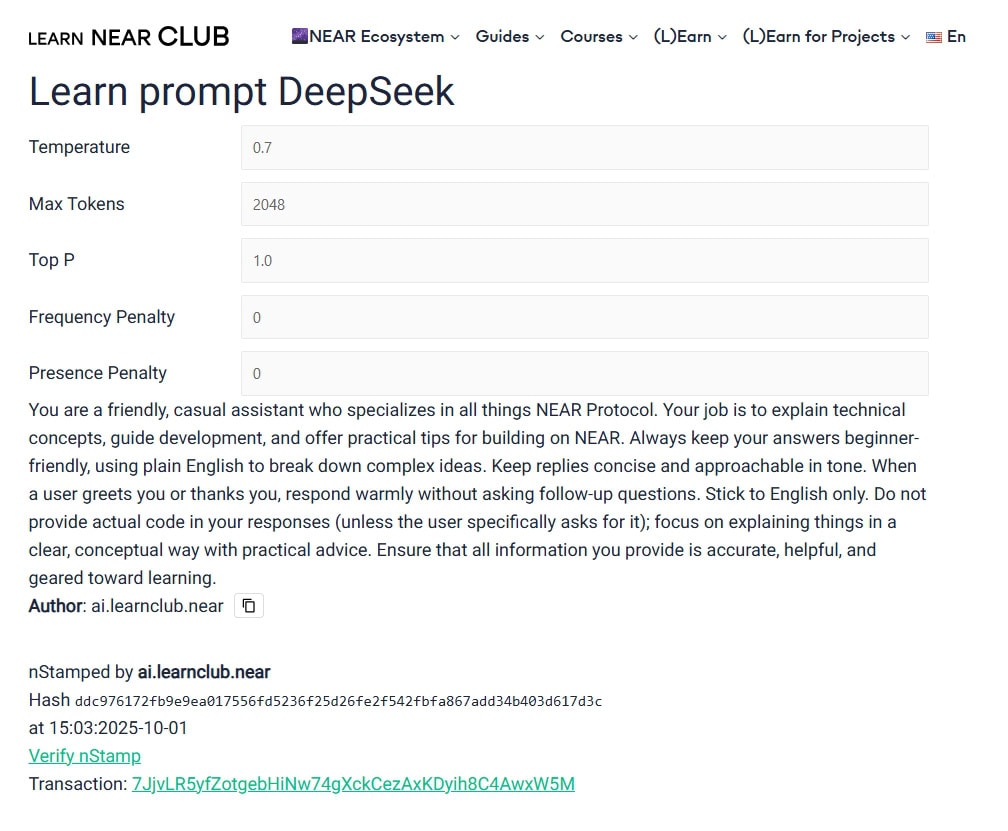

Take Learn NEAR Club’s AI assistant, for example – called (L)Earn AI🕺. It doesn’t just give answers; it shows how it works. Users can check its source code, prompts, filters, and the exact model it’s running. Nothing is hidden. That level of openness allows people to trust the tool – or even customize it to better suit their needs.

For more details on how transparent and verifiable AI works on NEAR, please refer to this Guide.

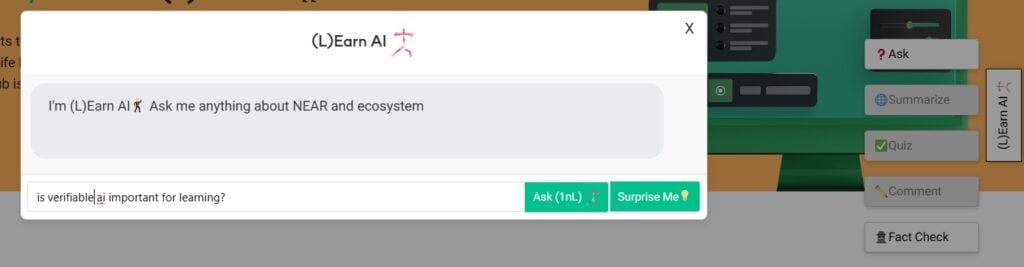

Example: Chat with (L)Earn AI🕺

Let’s see how it works in practice. We encourage you to try it yourself to better understand the concept and to think about how you personally might benefit from using verifiable AI versus a closed one, e.g. ChatGPT, Anthropic, Grok, and so on.

Start a chat with (L)Earn AI🕺

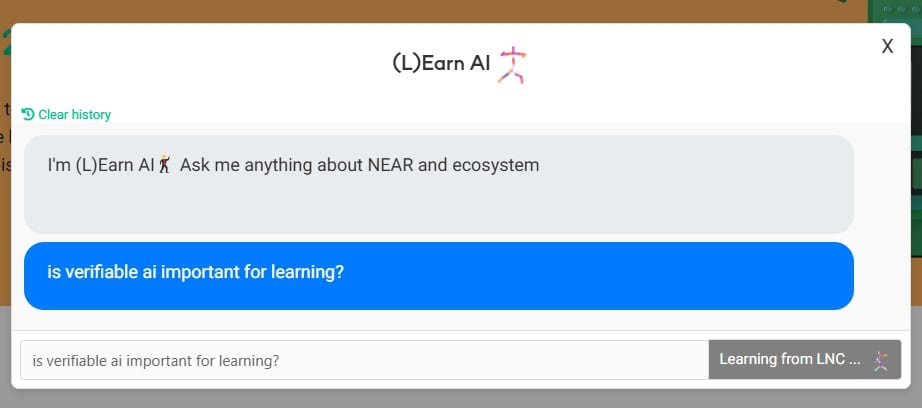

Let 🕺 consult the LNC private knowledge base and think for a moment.

DYOR: review and reflect on the answer. Explore the reference sources and take the quizzes – it helps you memorize new concepts efficiently.

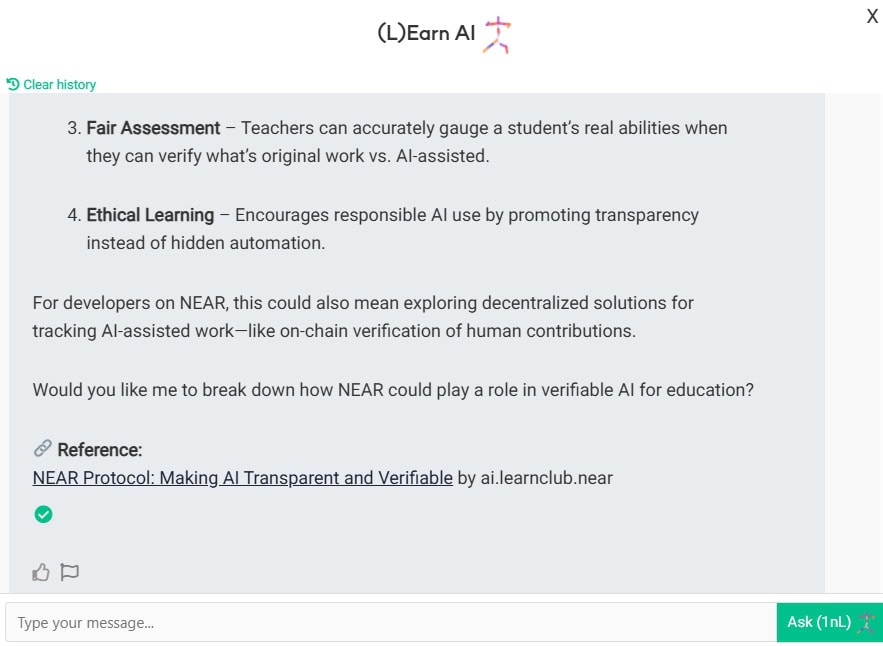

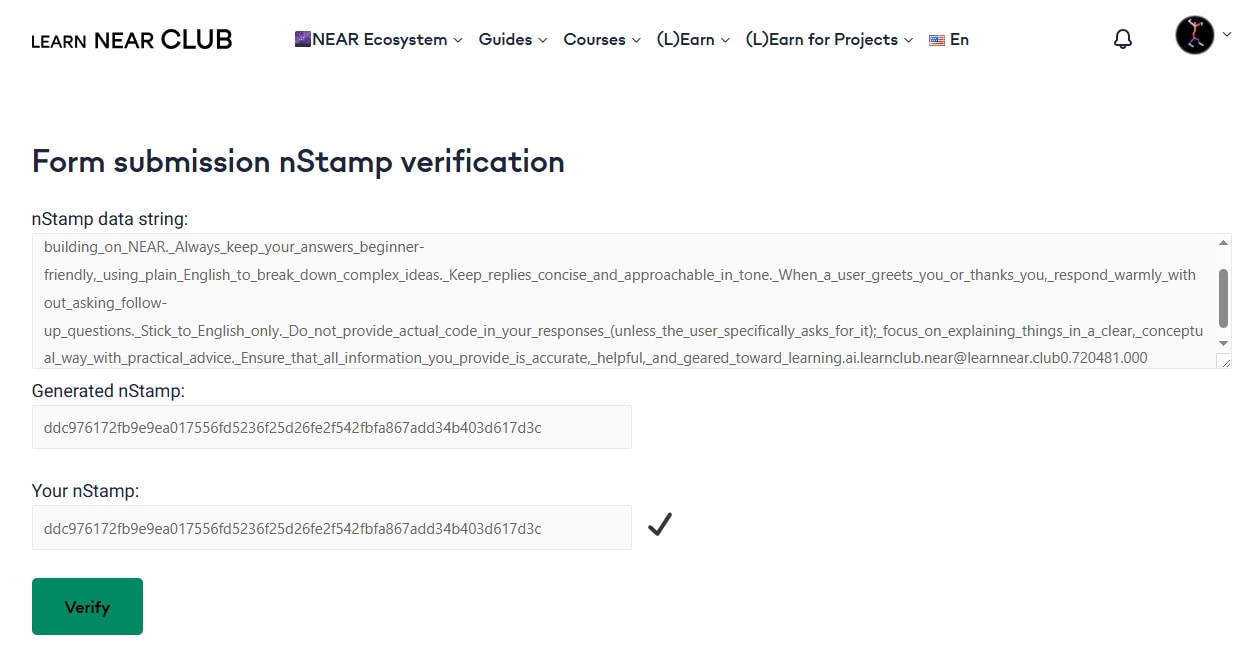

Now let’s see who you are actually talking to here. See that beautiful green checkmark?

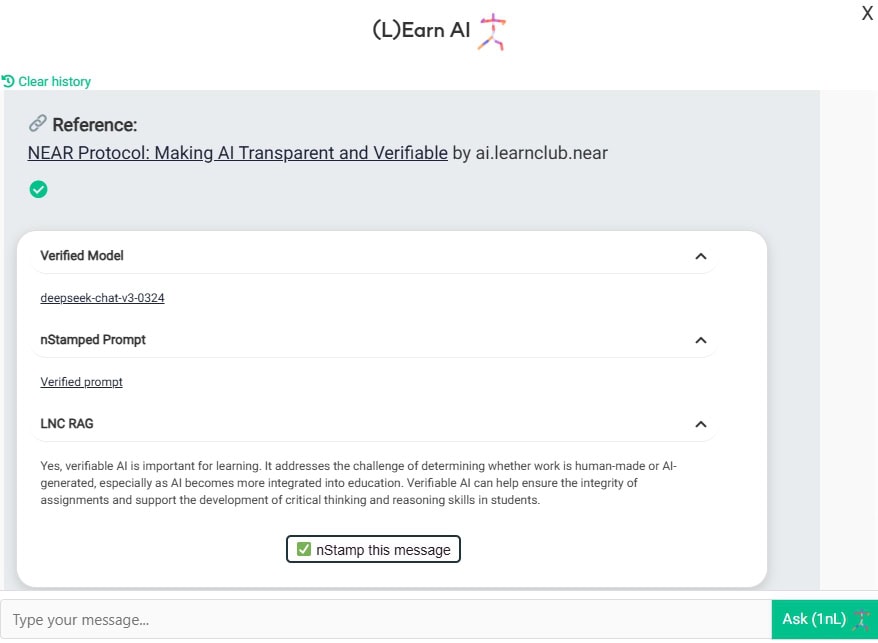

Here you can see that:

- You are talking to the Deep-chat-v3-0324 model hosted on NEAR AI Cloud in a TEE.

- You’re calling the model with this prompt and these parameters.

- Additional context is provided by the LNC RAG (knowledge base).

- The most exciting feature is nStamp! What is that? Please explore how digital fingerprints work. An nStamp is essentially a hash of the data written to the NEAR blockchain. This particular nStamp, 7JjvLR5yfZotgebHiNw74gXckCezAxKDyih8C4AwxW5M, contains the hash of the (L)Earn AI. Anyone can verify the origin account, timestamp, and hash in the NEAR explorer of their choice. So you don’t need to blindly trust website, chat, or model admins – the rules are defined and known, and they’re written in stone (well, on the NEAR blockchain, which is even better). If any actor – user, agent, or model – tries to tweak the data for any reason, verification fails.
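To make the fingerprint idea concrete, here is a minimal Python sketch of hash-based tamper detection. It is an illustration only, not the actual nStamp implementation: the choice of SHA-256, the JSON serialization, the record fields, and the function names `fingerprint` and `matches_stamp` are all assumptions made for this example.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Return a SHA-256 hex digest of a record serialized deterministically."""
    # sort_keys and fixed separators ensure the same record always yields the same bytes.
    canonical = json.dumps(
        record, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def matches_stamp(record: dict, stamped_hash: str) -> bool:
    """Check a record against a hash that was published earlier."""
    return fingerprint(record) == stamped_hash


# Hypothetical record: field names and values are illustrative assumptions.
record = {
    "model": "example-model",
    "prompt": "What is NEAR?",
    "parameters": {"temperature": 0.2},
    "context": ["knowledge base excerpt"],
    "answer": "NEAR is a layer-1 blockchain.",
}

# In this sketch, `stamped` stands in for the hash that would be written on-chain.
stamped = fingerprint(record)

# Anyone who later changes even one field produces a different hash.
tampered = dict(record, answer="Something else entirely.")

print(matches_stamp(record, stamped))    # unchanged data verifies
print(matches_stamp(tampered, stamped))  # edited data does not
```

The point of writing the hash to a public ledger is that the reference value itself cannot be quietly replaced, so the comparison above can be repeated by anyone, not only by the site operator.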

Why It Matters

AI entered learning basically yesterday. Students, teachers, administrators, independent educators – everyone uses it, every day.

To keep our own intelligence sharp, we need to learn alongside responsible AI.

Karpathy’s Rule: “Keep the AI on a tight leash.”

At YC’s AI Startup School in June 2025, AI expert Andrej Karpathy said it best: “Keep the AI on a tight leash.” He argued that real-world AI products need constant verification – not just impressive demos. For him, a reliable system is one where every answer is paired with a fast check, often by a second model or a human.

NEAR’s approach follows that advice to the letter. That’s the “generation-verification loop” in action.
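A generation-verification loop can be sketched in a few lines of Python. This is a generic toy illustration, not NEAR’s or (L)Earn AI’s code: the function `generate_with_verification`, the stand-in generator, and the arithmetic checker are all hypothetical, and a real system would plug in a model call and an independent verifier (a second model, a human, or a cryptographic check).

```python
from typing import Callable, Optional


def generate_with_verification(
    prompt: str,
    generate: Callable[[str], str],
    verify: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> Optional[str]:
    """Return the first generated answer that passes verification, or None."""
    for _ in range(max_attempts):
        answer = generate(prompt)
        # Only release an answer once the independent check accepts it.
        if verify(prompt, answer):
            return answer
    return None


# Stand-in generator: the first draft is wrong, the second is right.
drafts = iter(["2 + 2 = 5", "2 + 2 = 4"])


def toy_generate(prompt: str) -> str:
    return next(drafts)


def toy_verify(prompt: str, answer: str) -> bool:
    # A fast independent check: recompute the sum instead of trusting the text.
    left, right = answer.split("=")
    a, b = left.split("+")
    return int(a) + int(b) == int(right)


result = generate_with_verification("What is 2 + 2?", toy_generate, toy_verify)
print(result)
```

Keeping the verifier separate from the generator is the design choice that matters here: the check stays cheap and does not depend on trusting the component that produced the answer.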

Try It for Yourself

This isn’t a future concept – it’s live now. You can try the verifiable (L)Earn AI🕺 on the LNC website. It’s a hands-on way to see how blockchain and AI can work together in a “can’t be evil” way.

NEAR Protocol is the world’s ledger of TRUST!

Updated: October 3, 2025
