Why AI Needs Better Privacy

Artificial intelligence is advancing quickly, but many people and organizations still cannot use their most valuable data with it. To use most AI tools today, you must send your text, documents, or records to computers that you do not own and cannot inspect. You have to rely on company policies, legal contracts, and marketing promises, and any of these can fail.

So the big question is: how can we unlock the full power of AI without giving up privacy and control over our data?

The NEAR AI Approach: Privacy You Can Verify

NEAR AI was created to close this “AI privacy gap”. Its products are built on two main ideas: verifiable privacy and user-owned AI.

Verifiable privacy means you do not just trust what a company says. Instead, you can check cryptographic proof that your data stayed private and secure during the whole AI process. User-owned AI means that you stay in control of how your data and AI models are used.

This design follows NEAR Protocol’s vision that people should truly own their AI and safely bring important data into AI systems.

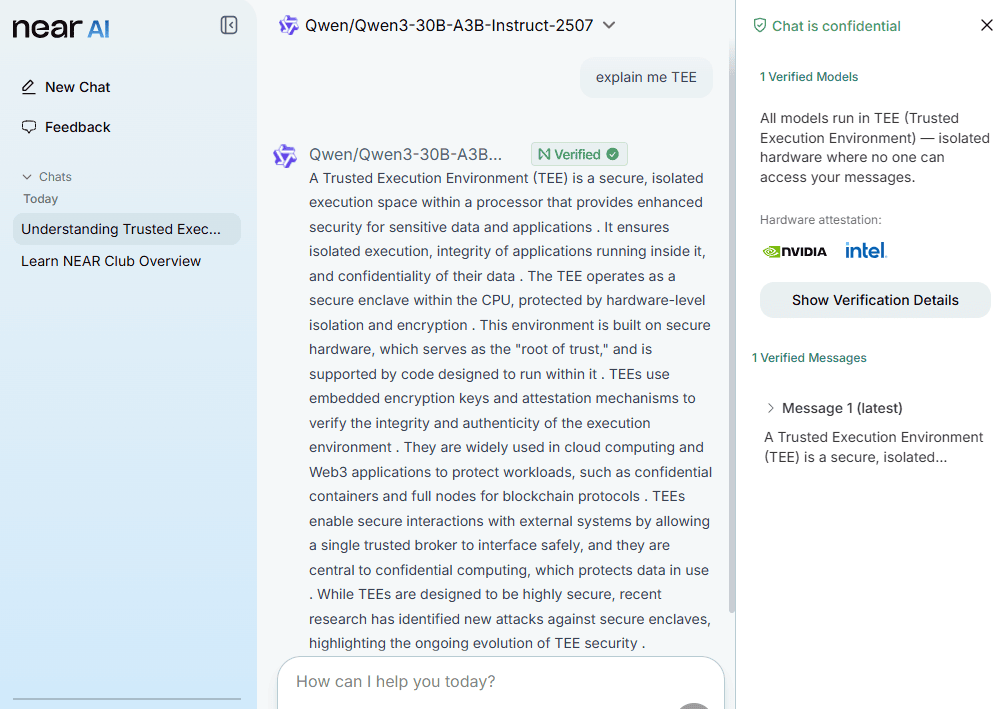

Trusted Execution Environments: A “Vault” for Your Data

The core technology behind NEAR AI is a protected area of the processor called a Trusted Execution Environment (TEE). NEAR AI builds its confidential computing tools on TEEs from hardware vendors such as Intel and NVIDIA.

You can imagine a TEE as a locked, sealed vault inside a computer’s processor. Data goes into this vault, is used by an AI model, and then leaves again, but nobody outside the vault can see what happens inside. Not the cloud provider, not the server operator, and not even NEAR AI.

Here is how a private AI interaction works with NEAR AI:

- Encrypt: Your prompt or data is encrypted on your device before it is sent to a secure virtual machine inside the TEE.
- Isolate: Inside this protected “enclave”, the AI model decrypts your data, runs the inference (the process of generating an answer), and then re-encrypts the result.
- Verify: You decrypt the response locally. You also get a cryptographic proof showing that the computation was done on genuine, unmodified hardware using the expected code.

This gives end-to-end privacy that you can independently verify, instead of just believing a promise.
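The three steps can be sketched as a round trip in Python. This is a toy model under stated assumptions, not NEAR AI's implementation. The client and the enclave are assumed to already share a session key; in real systems such a key is typically negotiated with a key bound to the attestation report, which is not shown here. The "model" is a hypothetical function (`enclave_handle`). The cipher is a teaching construction (HMAC-SHA256 in counter mode plus an HMAC tag), chosen only because it runs with the standard library. Production systems use standard authenticated encryption such as AES-GCM.

```python
import hashlib
import hmac
import secrets

# Toy authenticated cipher for illustration only (not production crypto).
# Real systems use a standard AEAD such as AES-GCM.

def _subkey(key: bytes, label: bytes) -> bytes:
    """Derive independent encryption and MAC keys from one session key."""
    return hmac.new(key, label, hashlib.sha256).digest()

def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """HMAC-SHA256 in counter mode as a pseudorandom keystream."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])

def seal(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt-then-MAC. Output layout: nonce (16) || ciphertext || tag (32)."""
    nonce = secrets.token_bytes(16)
    stream = _keystream(_subkey(key, b"enc"), nonce, len(plaintext))
    ct = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(_subkey(key, b"mac"), nonce + ct, hashlib.sha256).digest()
    return nonce + ct + tag

def unseal(key: bytes, blob: bytes) -> bytes:
    """Check the tag first, then decrypt; reject anything that was altered."""
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(_subkey(key, b"mac"), nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed: data was modified")
    stream = _keystream(_subkey(key, b"enc"), nonce, len(ct))
    return bytes(c ^ s for c, s in zip(ct, stream))

def enclave_handle(session_key: bytes, sealed_prompt: bytes) -> bytes:
    """Isolate (simulated): decrypt, run inference, re-encrypt inside the TEE."""
    prompt = unseal(session_key, sealed_prompt).decode()
    answer = f"Answer to: {prompt}"  # hypothetical stand-in for the AI model
    return seal(session_key, answer.encode())

# Assumption: this key was already agreed with the attested enclave.
session_key = secrets.token_bytes(32)

sealed_prompt = seal(session_key, b"Summarize my medical record")  # Encrypt
sealed_reply = enclave_handle(session_key, sealed_prompt)           # Isolate
reply = unseal(session_key, sealed_reply)                           # decrypt locally
print(reply.decode())  # Answer to: Summarize my medical record
```

Anything in between, such as the cloud host or the network, sees only `sealed_prompt` and `sealed_reply`. Changing even one byte of either makes `unseal` raise `ValueError`.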

Two Products: NEAR AI Cloud and NEAR Private Chat

NEAR AI uses this technology in two different products, each for a different audience and use case:

- NEAR AI Cloud is designed for developers, enterprises, and governments.
- NEAR Private Chat is designed for everyday users.

| Feature | NEAR AI Cloud | NEAR Private Chat |
|---|---|---|
| Primary audience | Developers, enterprises, and governments | Everyday users |
| Core purpose | To deploy private AI inference for sensitive workloads and proprietary data | To have private, everyday AI conversations with the peace of mind you deserve |
| How to use | Via an OpenAI-compatible API that integrates directly into applications and services | Through a familiar and easy-to-use chat interface |

Both products are built on the same privacy-first foundation, but they offer different ways to interact with AI.

NEAR AI Cloud: Private AI for Sensitive Workloads

NEAR AI Cloud is a platform for running AI that handles sensitive or regulated data, such as customer information or proprietary business logic.

Its main benefits are:

- Fast private deployment: Developers can add private AI to their apps in minutes, using a single API that is compatible with existing OpenAI-style tools.
- Built-in data and IP protection: Every request runs inside a TEE. This protects personal data and intellectual property by design and reduces the need to manage many separate security tools.
- Flexibility without lock-in: Teams can switch between different AI models or scale their usage up and down without changing their code.
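NEAR AI Cloud is described as OpenAI-compatible, so a request should follow the familiar OpenAI chat-completions shape. The sketch below uses Python's standard library to build such a request without sending it. The base URL, API key, and model names are hypothetical placeholders, not NEAR AI values. Take the real endpoint and model identifiers from docs.near.ai.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the values documented at docs.near.ai.
BASE_URL = "https://api.example.invalid/v1"
API_KEY = "YOUR_API_KEY"

def build_chat_request(model: str, prompt: str,
                       base_url: str = BASE_URL,
                       api_key: str = API_KEY) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

prompt = "Draft a short privacy notice for our clinic."
req_a = build_chat_request("model-a", prompt)  # hypothetical model IDs
req_b = build_chat_request("model-b", prompt)  # only the model name changes
print(req_a.get_method(), req_a.full_url)

# Sending is one more step (needs a real endpoint and key):
# with urllib.request.urlopen(req_a) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Switching models means changing only the `model` argument, which is the "no lock-in" point above. Because the format is OpenAI-compatible, the official `openai` Python SDK can likely be used as well by passing a different `base_url` to its client. Confirm this in the NEAR AI documentation.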

NEAR AI Cloud is already being used in production by partners such as Brave Nightly, OpenMind, Phala, and Learn NEAR Club for privacy-critical applications.

NEAR Private Chat: Everyday Conversations, Kept Private

NEAR Private Chat brings the same strong privacy guarantees to normal, daily AI chats. You can ask about money, health, work, or relationships knowing that your data is not used to train models, is not mined for ads, and is not visible to the model provider, the cloud provider, or NEAR.

Its privacy model is built on four simple principles:

- Private: The AI models run inside TEEs.
- Secure: Data inside a TEE cannot be read by the host system or other apps.
- Verifiable: Users can check cryptographic proofs that the hardware and code are genuine and untampered.
- Yours: Your data stays yours. It is not visible to model providers, cloud providers, or NEAR.
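The "Verifiable" principle rests on remote attestation. The TEE hardware signs a report containing a measurement (a hash of the code loaded into it), and the user checks that report before trusting it. The minimal Python sketch below shows that check under some important assumptions. Real reports are signed with asymmetric keys that chain to Intel or NVIDIA root certificates and carry more fields. Here an HMAC key stands in for the vendor signature so the example runs with the standard library. The `AttestationReport` layout and the `hardware_sign` helper are hypothetical.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass

# Stand-in for the hardware vendor's signing key. Real attestation uses
# asymmetric signatures chained to vendor root certificates; HMAC is used
# here only so this sketch runs with the standard library.
VENDOR_KEY = secrets.token_bytes(32)

@dataclass
class AttestationReport:
    measurement: bytes  # hash of the code/image loaded into the TEE
    nonce: bytes        # challenge chosen by the user, proves freshness
    signature: bytes    # "vendor" signature over measurement || nonce

def hardware_sign(measurement: bytes, nonce: bytes) -> AttestationReport:
    """Simulates the TEE hardware producing a signed report (hypothetical)."""
    sig = hmac.new(VENDOR_KEY, measurement + nonce, hashlib.sha256).digest()
    return AttestationReport(measurement, nonce, sig)

def verify_report(report: AttestationReport,
                  expected_measurement: bytes, nonce: bytes) -> bool:
    """Accept only a fresh, genuinely signed report for the expected code."""
    expected_sig = hmac.new(VENDOR_KEY, report.measurement + report.nonce,
                            hashlib.sha256).digest()
    return (
        hmac.compare_digest(report.signature, expected_sig)                  # genuine hardware
        and hmac.compare_digest(report.measurement, expected_measurement)   # expected code
        and hmac.compare_digest(report.nonce, nonce)                        # not a replay
    )

published_code = b"inference-server image bytes"  # hypothetical published build
expected = hashlib.sha256(published_code).digest()
challenge = secrets.token_bytes(16)

good = hardware_sign(expected, challenge)
tampered = hardware_sign(hashlib.sha256(b"modified image").digest(), challenge)
print(verify_report(good, expected, challenge))      # True
print(verify_report(tampered, expected, challenge))  # False
```

Each check maps to a claim above. The signature shows the hardware is genuine, the measurement shows the code is untampered, and the nonce shows the report was produced for this request rather than replayed from an earlier one.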

Getting Started with NEAR AI

If you are a developer:

- Explore the full documentation at docs.near.ai.
- Review the code and examples on GitHub at github.com/nearai.

If you are an everyday user:

- Try NEAR Private Chat at private.near.ai/welcome and experience verifiable privacy in practice.

Takeaway

NEAR AI shows that we do not have to choose between powerful AI and strong privacy. By combining cryptography, secure hardware, and clear design, it makes it possible to use sensitive data with AI while keeping control in the hands of users and organizations. Verifiable privacy turns “trust us” into “check for yourself” and opens the door to safer, more confident use of AI everywhere.

Reflective questions

- When you use AI tools today, what kinds of data do you currently avoid sharing, and why?
- How could verifiable privacy change the way your school, workplace, or business uses AI?
- In your own words, how would you explain a Trusted Execution Environment to a friend?

Updated: December 3, 2025
